As chair of HITSP, I am a non-voting facilitator who supports all stakeholders equally - large/small, open source/commercial, payers/providers. It's rare for me to personally champion an idea, but today's HITSP Board meeting has given me a cause to celebrate.

We reviewed a proposal, to be widely circulated among all stakeholders, which envisions a future for HITSP as the champion of "service oriented architecture (SOA)" for healthcare.

Before you declare that SOA is just another fad at the peak of the Gartner hype curve, realize that SOA is not about a specific technique such as web services, but about a way of connecting organizations using a set of principles that adapts easily to changes in technology.

The joy of a service oriented architecture is that it is loosely coupled - each participant does not need to understand the internal applications of trading partners. SOA exchanges typically do not require complex technical rules for interactions between senders and receivers.

Highlights of SOA include:

* Service "contract" - Services adhere to a communications agreement, as defined collectively by one or more service description documents

* Service abstraction - Beyond what is described in the service contract, services hide logic from the outside world

* Service reusability - Logic is divided into services with the intention of promoting reuse

* Service composability - Collections of services can be coordinated and assembled to form composite services

* Service autonomy - Services have control over the logic they encapsulate

* Service discoverability - Services are designed to be outwardly descriptive so that they can be found and assessed via available discovery mechanisms
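As a rough illustration of how these principles fit together, here is a minimal Python sketch. It is not drawn from any HITSP specification: the names `ServiceContract`, `Service`, `ServiceRegistry`, and `compose`, and the two toy medication services, are all hypothetical and exist only to show a contract, hidden logic, discovery, and composition in a few lines.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class ServiceContract:
    """The published agreement: a name, a description, and expected fields."""
    name: str
    description: str
    input_fields: tuple
    output_fields: tuple


class Service:
    """Exposes only a contract and a call method; the handler stays hidden."""

    def __init__(self, contract: ServiceContract, handler: Callable[[dict], dict]):
        self.contract = contract
        self._handler = handler  # internal logic, invisible to trading partners

    def call(self, request: dict) -> dict:
        # Enforce the contract before touching the internal logic.
        missing = [f for f in self.contract.input_fields if f not in request]
        if missing:
            raise ValueError(f"request violates contract, missing: {missing}")
        return self._handler(request)


class ServiceRegistry:
    """A toy discovery mechanism: search service descriptions by keyword."""

    def __init__(self) -> None:
        self._services: Dict[str, Service] = {}

    def register(self, service: Service) -> None:
        self._services[service.contract.name] = service

    def discover(self, keyword: str) -> List[ServiceContract]:
        return [s.contract for s in self._services.values()
                if keyword.lower() in s.contract.description.lower()]

    def get(self, name: str) -> Service:
        return self._services[name]


def compose(first: Service, second: Service) -> Callable[[dict], dict]:
    """Composability: feed the output of one service into the next."""
    def composite(request: dict) -> dict:
        return second.call(first.call(request))
    return composite


# Hypothetical services with made-up data, for illustration only.
registry = ServiceRegistry()
registry.register(Service(
    ServiceContract("medication_list", "Return the medication list for a patient",
                    ("patient_id",), ("patient_id", "medications")),
    lambda req: {"patient_id": req["patient_id"], "medications": ["example-drug"]},
))
registry.register(Service(
    ServiceContract("medication_count", "Count entries in a medication list",
                    ("medications",), ("count",)),
    lambda req: {"count": len(req["medications"])},
))

found = registry.discover("medication list")
pipeline = compose(registry.get("medication_list"), registry.get("medication_count"))
result = pipeline({"patient_id": "demo-123"})
```

The caller never sees the handlers, only the contracts returned by `discover`. That is the loose coupling described above: either handler could be replaced without the other participant noticing, as long as the contract is honored.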

To date, HITSP interoperability specifications have focused on the contents of the medical record and vocabularies, not the transaction rules among participating systems needing to exchange data. Although HITSP has developed specifications for transmission of data, it has not specified a common "envelope" for routing and delivery of healthcare information, leaving that to individual implementers.
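To make the "envelope" idea concrete, the sketch below wraps an opaque payload with routing metadata so that delivery never needs to inspect the clinical content. This is an assumption-laden illustration, not a HITSP design: the field names (`sender`, `recipient`, `content_type`, `message_id`), the JSON serialization, and the `route` helper are all hypothetical.

```python
import json
import uuid
from dataclasses import dataclass, field, asdict


@dataclass
class Envelope:
    """Routing metadata wrapped around a payload the router never parses."""
    sender: str
    recipient: str
    content_type: str
    payload: str
    message_id: str = field(default_factory=lambda: str(uuid.uuid4()))

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)

    @classmethod
    def from_json(cls, raw: str) -> "Envelope":
        return cls(**json.loads(raw))


def route(envelope: Envelope, inboxes: dict) -> None:
    """Deliver using only envelope metadata; the payload is left untouched."""
    inboxes.setdefault(envelope.recipient, []).append(envelope)


# Hypothetical endpoints and placeholder content, for illustration only.
inboxes = {}
original = Envelope("clinic-a.example", "hospital-b.example",
                    "text/plain", "opaque clinical document goes here")
route(Envelope.from_json(original.to_json()), inboxes)
```

Because routing depends only on the envelope fields, the same delivery code could carry a medication list, a discharge summary, or glucometer data without modification.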

The HITSP Board voted to establish a working group which will deliver a plan within 90 days to wrap all HITSP work so that it will plug and play with a service oriented architecture. The advantage of doing this is that it provides a strategic position for HITSP within national initiatives as the coordinator and harmonizer of SOA for healthcare. It also helps all the other national healthcare IT stakeholders by:

* Incorporating the lessons learned from the recent Nationwide Health Information Network demonstration

* Providing CCHIT with the necessary standards and framework for certifying Health Information Exchange

* Aligning HITSP work with industry trends including the Federal Health Architecture and IHE efforts

* Rationalizing the reuse and repurposing of HITSP interoperability specifications and their components

You'll hear much more about this effort over the next several months.

When a healthcare stakeholder says "I need to exchange medication lists, discharge summaries, personal health records, glucometer data and quality data," the answer will be - HITSP has a well defined service component for that!

Tuesday, September 30, 2008

Monday, September 29, 2008

The Wall Street Crisis

During the decade I've been CIO, IT operating budgets have been 2% of my organization's total budget, which is typical for the healthcare industry.

During the same period, IT budgets for the financial services industry have averaged 10% or higher.

Since 1998, I've often been told that Healthcare IT needs to take a lesson from the financial folks about doing IT right.

Today's Boston Globe nicely summarizes the financial issues at the core of the crisis:

1. Mortgage backed securities

2. "Stop loss" insurance on mortgage backed securities

3. The credit crunch

4. The Banking system

5. The Bailout

6. Recession v. Depression

Given the recent challenges of Lehman, Merrill, AIG, Washington Mutual, and others, you wonder just how effective the IT systems of these companies have been.

Of course they had great transactional systems, disaster recovery, infrastructure, and data warehouses.

However, did they have the business intelligence tools and dashboards that could have alerted decision makers about the looming collapse of the industry?

Did the financial services industry have controls, risk analysis, or a memory of previous crises - the Depression, the Japanese banking crisis, Enron/Worldcom? Was it greed, irrational expectations or too much data and not enough information that brought down these great institutions?

I'm sure many books will be written about the causes and those who are to blame.

One thing is for certain: in 2008, no one is going to tell me that healthcare IT should run as well as Lehman Brothers. I've even talked to folks in the industry who are rewriting their websites and resumes to remove references to their overwhelming past successes in financial services IT.

I certainly feel for everyone in the financial services industry - the anxiety and stress must feel overwhelming. Given that every person in the US will be paying $3000 in tax dollars to rescue the industry if the bailout bill passes today, we're all going to accept responsibility for the IT systems and the management using them that led the unsinkable ship of the financial services industry into an iceberg at full speed.

Friday, September 26, 2008

Cool Technology of the Week

You may have seen the recent American Express commercial featuring Jim Henson, Ellen DeGeneres and others which highlights "The Members Project", a $2.5 million grant program for innovative, out of the box ideas.

The funds to be awarded are:

* $1,500,000 for the winning project

* $500,000 for the 2nd place project

* $300,000 for the 3rd place project

* $100,000 each for the two remaining finalist projects

One project, New Affordable Cancer Treatments, was submitted by a group from the Boston healthcare community. It's a cool idea.

Cancer incidence is rising at an alarming rate all over the world. As many with cancer know, treatments are often toxic, marginally effective, and expensive. For patients without health insurance, here and in the developing world, many newer treatments are simply too expensive.

And yet, there may exist today new, affordable and effective treatments for cancer using combinations of generic drugs and substances that were never patented. To be truly useful to patients, one needs to conduct clinical trials with these substances to determine the proper combinations and doses for effective therapy. Pharmaceutical companies will not invest money in such trials, since the profit potential with non-patentable or patent expired drugs is small.

GlobalCures was established last year as a non-profit medical research organization to address this problem. GlobalCures’ outstanding scientific team has identified many promising treatments available immediately to test in clinical trials.

The GlobalCures idea was posted under the title: New, Affordable Treatments with Existing Drugs. After the first round, thanks to the overwhelming support of voters and the review panel, the project is in the top 25 (out of 1190 projects posted).

If you're interested, you can help in two ways:

1. If you have an Amex card, you can VOTE for the project by clicking New, Affordable Treatments with Existing Drugs (you will need to log in on the top right corner of the page)

2. Help us spread the word about the idea by posting to your blog, Facebook, website, etc.

The Idea:

The idea is to rapidly develop affordable, new therapies for major diseases by conducting clinical trials with combinations of generic or unpatented substances. Pharmaceutical companies will not conduct such trials, since they will not be able to recover their investments. Without evidence from such trials, doctors will be reluctant to prescribe such medicines and health insurance will not cover their cost. We estimate this idea will reduce suffering and save millions of lives within a few years.

The Problem:

Most laboratory research that generates ideas for promising therapies is funded by the government. Pharmaceutical companies then conduct clinical trials to get FDA approval. Since clinical trials are expensive, only lab work with patent protection is singled out by commercial enterprises for further investment. This scenario has left behind untapped opportunities: potential therapies based on substances that were never patented or generic drugs that could be used for new indications.

A cool idea that could save lives by better using technology we already have!

Thursday, September 25, 2008

Cycling in the Suburbs of Boston

Outdoor activities in New England are a function of the seasons. It's challenging to cycle in ice and snow. Kayaking in 40 degree water can be deadly. Climbing frozen rock when the ice is not yet quite thick enough for ice climbing is dicey.

Thus, my outdoor activities are timed to maximize the best of each season and safety:

Cycling - April 1 to December 1 (dry roads)

Kayaking - May 1 to October 1 (water temperature above 50F)

Rock Climbing - June 1 to October 1 (warm, dry rock)

Ice Climbing - January 1 to March 1 (cold, solid ice)

Winter Mountaineering - December 21 to March 21 (solid snow pack)

Nordic skiing - December 1 to April 1 (reasonable snow cover)

I've blogged about many of these activities, but I've not written about cycling in the Boston area.

For folks from bike friendly cities like Portland, Oregon, you should know that cycling in Boston is a death sport - bad drivers on poorly maintained narrow roads. In the past decade, none of my friends have been injured while rock climbing. Several of my friends have been injured and one has been killed while cycling in Boston.

On the scale of Morts, cycling is probably about the most dangerous activity I do.

How do I mitigate risk? I cycle in Dover, Massachusetts where the roads are great, traffic is light, and the navigation is easy.

Here's a list of my favorite rides.

I pass great places like Cochrane Falls (picture above), the Charles River along South Street, Noanet Woodlands, Lookout Farm, Broadmoor Audubon Reserve, and some of the most scenic farmland inside the 495 loop.

I ride a Trek Hybrid FX 7.5 which is a road bike with 32mm hardened tires. This means I can cycle 20 miles to a great wilderness area and then cycle a few miles on single track trails to truly spiritual places.

For example, today after the work day, I cycled trails through Noanet Woodlands past a series of old Mill ponds and played my Japanese flute in harmony with the call of the Northern Saw-Whet owl at sunset.

Cycling in Dover, South Natick, Needham, and Wellesley can be safe and provide solace for the soul. I highly recommend cycling in the more rural suburbs of Boston before the ice and snow make riding a high risk adventure.

Wednesday, September 24, 2008

I've Got the Cold Calling Blues

Readers: An important update! I just exchanged email with Elizabeth Cutler, Group Vice President, US Conferences at IDC. IDC is not holding a 2009 IT Expo/CIO Summit conference, so the calls I've been receiving are a scam. That explains why they would not email me any materials about the conference. I have not experienced cold calling scams as a CIO before. My apologies to IDC. When I receive my next cold call from the company pretending to be IDC, I'll play along and forward the info to Elizabeth. Life as a CIO is never boring!

Today in the middle of a meeting, I received my 5th cold call from IDC trying to sell me a registration to the 2009 IDC IT Expo and CIO Summit.

I'm always kind to cold callers, and I explained that I know it's their job to sell me products and services I have not specifically solicited and do not want to buy.

In this case, I explained that I travel 200,000-400,000 miles per year and keynote all over the world. My goal in 2009 is to travel less, so I will not be attending any IDC conferences which require travel.

I also said that I would be happy to read about their conference and circulate it to my peers. However, this particular IDC marketing campaign seems to prohibit email communications. I've told the callers 5 times to email me an overview and they've explained that they cannot. Must be part of the sales pitch.

As a society, we have declared unwanted email to be Spam and various states and localities have tried to restrict the flow of spam with legislation.

At what point will cold calling a business to sell the CIO an unsolicited product or service constitute telephone Spam or even harassment?

In the case of IDC, they have somehow obtained my private, unpublished office number that only my assistant and direct reports are supposed to know.

My phone rings about once a month, so I always answer it, assuming it's an urgent call from someone close to me.

Today, I replaced my standard desk phone with a caller ID enabled phone so that I can screen unwanted sales calls. This strategy has been very effective at home, where my unlisted number is called several times a day by:

The Boston Museum of Fine Arts

The Boston Ballet

The Massachusetts Fraternal Order of Police

The Boston Symphony Orchestra

Marketing and Survey organizations

The Unknown Caller (whoever that is, 8am on a Sunday morning is popular)

They never leave a message.

I have politely asked that my name be removed from all future fund raising lists and I am on the do not call list. Unfortunately, I made small contributions years ago to some of these organizations, which technically gives them the right to call because of a pre-existing business relationship.

Here is my action plan:

1. Sign up for every do not call list, do not mail list, do not send catalog list etc.

2. Put an embargo on donations to any unsolicited caller or visitor to my home.

3. Create a department-wide ban on purchases from any company making unsolicited calls to any IT staff member at BIDMC or Harvard.

And of course, my blog can serve as a public wall of shame for these cold calling companies. I'll ask my staff to send me lists of company names for publication. I see that WallofShame.org is available from GoDaddy.com.

CIOs are very busy people. They are providing complex services to demanding organizations and are given limited resources. The last thing they want is

"Hi, I'm Bob and I need to tell you about the best widget ever from xyz.com..."

when they are in the middle of a meeting, negotiating a contract, or writing a strategic plan.

As I tell all my vendors - I'm a voracious reader and when a new product is announced or a new need arises, I'll call you. Until then, tell your cold calling salesman to chill!

Tuesday, September 23, 2008

Maintaining an Agile IT Organization

I tell my managers that their professional lives will be like a rotating lighthouse. The beam will pass each part of the landscape and sometimes you'll find yourself and your group in the beam.

As strategic plans change, compliance demands arise, and board level priorities create highly visible projects, each team will feel the spotlight and may struggle with timelines and resources. The beam may focus for days or even months on a particular group.

Since all IT projects are a function of Time, Scope and Resources, when the lighthouse focuses on a group, I'm often asked about increasing FTEs. Getting new positions approved, especially in this economy, is very challenging. I go through an internal due diligence process following the Strategy, Structure, Staffing, and Processes approach I use for all IT management.

Here's what I do:

1. Strategy - When IT groups are created and position descriptions written, it's generally in response to a strategic need of the organization or mission critical projects. Strategies change and projects end, so it is important to revisit each part of the organization episodically to ensure it is still aligned with the strategic needs of the organization. Imagine an application group created at the peak of client/server technology. When the spotlight shines on such a group to deliver web-based applications, it may be that the group was never designed to be an agile web delivery department. Thus, I look at the strategy of the organization, the current state of technology in the marketplace and in the community, and the level of customer demand. We can then re-examine the assumptions that were used to charter the group and its positions. For example, 2 years ago, the PeopleSoft team faced growing demands for application functionality and high levels of customer service. As chartered, our PeopleSoft team was a technology group without staff devoted to workflow analysis, subject matter expertise, and proactive alignment of new PeopleSoft functionality with customer needs. We re-chartered the group as a customer facing, business analyst driven, service organization backed by a world class technology team. Given the visibility of our financial projects at that time, the organization was willing to fund expansion of the team.

2. Structure - it may be that the team is not structured properly to deliver the level of service needed by the customers. Recently, I worked with my Harvard Media Services team to align job descriptions and hours worked with customer demand. This restructuring was entirely data driven and demonstrated that in order to meet evolving customer expectations we needed one person to work 4 ten hour days, four people to work 5 eight hour days, and one person to serve as a line supervisor, scheduling everyone and communicating to customers. The end result was a minimal increase in expense but a complete realignment of our organizational structure with the current needs of the enterprise.

3. Staffing - it may be that staff skill sets are perfect for their job descriptions as originally written, but they are no longer appropriate for the current state of technology. Training is critically important to maintain an agile IT organization so that staff can grow as technology grows. Based on expertise, levels of training, and capacity to evolve, staff in a group may be promoted or reassigned to best align them with the current strategy and structure.

4. Processes - it may be that customer service issues are related to less than optimal communication or lack of a consistent service process. In my blog about Verizon, a few cents spent on a sticker telling customers to call for router activation would markedly improve the customer experience. When the spotlight focuses on a group, I make sure we have optimized and documented our processes to serve the needs of customers.

CIOs should not assume their organization will be static. Technology changes rapidly and customer demands continue to grow. A healthy re-examination of each part of the organization to ensure strategy, structure, staffing and process are optimized will ensure an agile IT organization that grows and thrives over time. When the lighthouse beam shines on your group, welcome it as an opportunity to renew!

Monday, September 22, 2008

When Technology Gets Too Complex

At home, my family relies on me for tech support. When a home requires an MIT trained CIO to keep it running, you know that technology has gotten too complex for the consumer. Here's the story.

I have Verizon FIOS at home. Despite a rocky start up it's been a fantastic service, offering me consistent 20 megabit/second download speeds.

Verizon has partnered with ActionTec to provide a wireless router called the MI424-WR to home users.

As one of the first FIOS subscribers, I received Hardware Revision A of this device.

Verizon automatically pushes firmware upgrades to their FIOS customers and on January 3, 2008, my ActionTec router received the 4.0.16.1.56.0.10.7 upgrade.

Since that point my home wireless connections have become unstable, DHCP leases cannot be renewed, and my family has made a ritual of rebooting the router several times a day.

Our household echoed with commentary that was like a scene from the Waltons. Goodnight, John Boy. Mary Ellen, can you reboot the router?

I searched the web and found hundreds of similar stories. Each one contained a "fix" that sounded more like voodoo than technology.

Hardcode the wireless channel to 11 and it will solve the problem.

Begin DHCP IP assignment at 192.168.1.150 and it will solve the problem.

Limit wireless to 802.11g only and it will solve the problem.

I reset the router to factory defaults and tried each one of these solutions. Since each required a router reboot, it appeared to solve the problem temporarily but in the end the router was still unstable.

My comprehensive review of all the blogs, forums, and user sob stories all over the web suggested one final solution. At this point, ActionTec has redesigned the MI424-WR four times and the new circuit board, hardware Revision D, contains completely different wireless networking chips.

Interesting that ActionTec has redesigned the device to address some technical issues, but Verizon does not have a customer notification or router replacement program. It also seems strange that one set of firmware revisions could work well on 4 different hardware revisions.

I called Verizon and spoke to a very competent and helpful person. I explained the history of my problem and the various attempts I'd made to solve it. I requested an exchange of my Model A router for a Model D router. She checked with management and agreed to the exchange. She did note that Verizon provides a wireless router but does not support wireless - it's a use at your own risk service.

Two days later the Model D router arrived and it was clearly a major technology change. The form factor of the device was half the size of the Model A.

I connected the Model D router and could not connect to the internet - wired or wirelessly.

As a CIO, I predicted that Verizon uses MAC address filtering to prevent rogue connections to FIOS. Thus, Verizon would have to activate the MAC address of my new router in order for it to work.

There was no documentation in the router package or user manual that suggested this step; it was just my CIO intuition.

I called the automated Verizon FIOS service number and it identified me as a customer who recently received a new router. The system then offered me the option of activating my new router, which I did. Internet connectivity was restored in 90 seconds.

Dumb question - if this is required and expected of all consumers, then why not put a big label on the router - please call to activate?

I then began setting up wireless connections. The manual that accompanied the router had a MAC address and WEP key printed on it for my router, so I used that information in my laptop setup. No luck.

I then logged into the router via a wired connection and checked the MAC address and WEP key assigned to the device - they were completely different than those provided in the printed documentation.

I reconfigured my MacBook Air, my wife's MacBook Pro, my daughter's iMac, and suddenly everything was working.

A week has passed without a router reboot. After 9 months of struggle, the problem is resolved.

The bottom line of all of this is that Verizon did a great job getting me the router I needed, but it took a CIO with 25 years of technology troubleshooting experience to diagnose and repair the problem. Clearly, the combination of Verizon's policy to not provide full support for wireless, the bleeding edge technology provided by ActionTec, and the lack of change management around numerous hardware and firmware upgrades is too much complexity for the average consumer to deal with.

Between the HP printer woes of early September and this Verizon/ActionTec issue, my family is convinced that every modern household needs its own CIO. Now you know why Geek Squad and similar home technology consulting services are likely to be a growing business.


Friday, September 19, 2008

Cool Technology of the Week

I work in the Longwood Medical Area of Boston. 150 years ago, the entire area was fens and cow pastures. Now it includes Beth Israel Deaconess, Harvard Medical School, Brigham and Women's, Dana Farber, Joslin, Merck, and millions of square feet of research buildings. It also includes all the cars of the folks who work in these institutions, commuting on a two lane former cow path called Longwood Avenue.

Most of the year, I simply walk the mile between my Harvard office and CareGroup office. Walking a mile takes me 15 minutes. Driving a mile down Longwood can take 45 minutes.

I truly believe that the only solution to the traffic problem around the Longwood Medical Area is to eliminate cars completely. Longwood Avenue could be converted to a monorail track or even better a Point to Point Transportation System.

What's that, you ask? Check out Skyweb Express.

This technology, which does have a working alpha site, is a personalized mass transit "people mover" that runs continuously from the point of origin to the point of destination. It gets cars off the road, runs with greater energy efficiency than cars, and provides safe transportation for each passenger, taking them directly to their desired stop without having to stop needlessly along the way.

Since Boston has its share of cold, wet, and snowy weather, it's unrealistic to assume patients, clinicians and researchers will walk from point to point year round. Providing personalized mass transit such as the Skyweb Express would save time, energy, and frustration. Imagine the cost savings to the local economy of reducing 30 minutes from the commutes of the thousands of highly paid people working in the Longwood area.

At the moment, the various Boston mass transit agencies - the MBTA or "T" and the Turnpike - are all burdened by debt and unbalanced budgets, so change in the short term is unlikely. However, as Longwood becomes increasingly "Manhattanized" with skyscraper research buildings, it's clear that commuting by car will soon become impossible and we'll need to investigate transportation technologies like SkyWeb. Hence it's the cool technology of the week.

Thursday, September 18, 2008

Geocaching

In my blogs I've described the wide variety of places I've explored while climbing, hiking, and traveling.

One of the best ways to explore the roads less traveled where you live is geocaching.

The concept of geocaching is simple - finding a hidden treasure using GPS coordinates.

It all started with Letterboxing in Dartmoor, England in 1854 when James Perrott placed a bottle for visitors' cards at Cranmere Pool on the northern moor. Hikers on the moors began to leave a letter or postcard inside a box along the trail, hence the name "letterboxing". The next person to discover the site would collect the postcards and mail them. The first Dartmoor letterboxes were so remote and well-hidden that only the most determined walkers ended up finding them.

Letterboxing used trail descriptions and orienteering. With the advent of the modern GPS, the journey to finding the hidden treasure now begins with latitude and longitude.
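For the curious, here is a minimal sketch of the arithmetic a GPS unit does when it reports how far away a cache is. It uses the standard haversine formula for great-circle distance; the function name and the Earth radius constant are my own choices for illustration, not taken from any particular GPS or geocaching product.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius, a common approximation


def distance_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two points given in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    # Haversine formula
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))
```

Feed it your current position and the cache's published latitude and longitude, and it returns the straight-line distance left to travel, ignoring terrain and elevation.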

What's in a geocache? Most often the treasures consist of various collectibles, Hot Wheels, McDonald's toys and local souvenirs. The idea is that you leave something in the cache and take something. It's also common to leave a note in the visitors' register often included in the cache.

Sometimes caches are accessed by hiking, sometimes by climbing, sometimes by kayaking. Inevitably, they are placed in beautiful and special places.

My daughter and I have placed several over the years including one on an island in Boston Harbor.

There are currently 654,684 active geocaches around the world, including one of mine.

Grab a GPS and give it a try!

Wednesday, September 17, 2008

A new website for BIDMC

As part of our 2008 strategic plan, BIDMC has completely replaced its external website. This project was a collaboration of Corporate Communications and Information Systems, along with a number of vendor partners.

The success of the project depended upon several factors, given the complexity of the task and the need for coordination among all the stakeholders. Here are some lessons learned:

1. Gather requirements using a formal process - Corporate Communications created a vision of a useful, consumer-friendly, highly interactive web site, then retained a vendor, BigBad, to help implement the vision. They ran focus groups of all the intended users, interviewed departments throughout the hospital to understand complex and varied goals, and engaged with information systems to understand infrastructure/security/integration issues. The end result was a comprehensive requirements document with broad support from all stakeholders.

2. Create wireframes based on requirements - Before a single line of code is written, having a 'graphical storyboard' of how the site will be organized and how all the requirements will be addressed functionally is key. A wireframe diagram is a kind of roadmap that lays out the site so that everyone can assess its usability, features, and scope.

3. Establish clear roles and responsibilities - The division of labor for the project was that Corporate Communications managed the project and was responsible for all content, including multimedia, and workflow for the project. Information Systems provided hosting/security/databases including multiple environments for staging/testing/production. BigBad ran the requirements definition process, created the wireframes, and assisted with selection of the content management system (SiteCore, backed by a Google Appliance for search).

4. Create single points of project management and communication - Each of our teams (Corp Comm, IS, BigBad) appointed a single point of contact for communication. We did not expect Corp Comm to contact the network team about DNS issues or the server team about performance issues. The single point of contact in each group was responsible for broadly communicating all issues and resolving all problems by engaging all necessary staff within each group.

5. Embrace new technologies - The site includes a limited amount of Flash, a significant amount of streaming video (hosted by Brightcove external to the BIDMC network), blogs, chat, interactive maps, ecommerce, cascading style sheets to enforce a common look/feel/navigation paradigm throughout the site, and a medical encyclopedia. We've used these new technologies appropriately, realizing that our users have different needs - some may want to find out the basics of navigating BIDMC, while others may want to use social networking features.

6. Make it work in all browsers and operating systems - We support Firefox, IE, Chrome, Opera, Safari, etc. running anywhere on anything.

7. Test it thoroughly - we went live in staging and then went live to the public at a new URL to enable testing internal and external to our network before redirecting our old site to the new site.

8. Formulate a migration strategy - we mapped the main features of the old site to the new site to minimize 404 errors. Folks using bookmarks or searching Google can still get to their information because of 250 different directory level redirects built into an ISAPI filter running on the old site. This makes the transition easier for all stakeholders.

9. Engage the Community - To keep the content of the website fresh, Corp Communications trained over 120 delegated content managers who serve as the editors/publishers for each department and division of the hospital. Delegated content management ensures local departmental content needs are met, but an enterprise content management system and governance ensures total site consistency.

10. Overcommunicate – there were weekly standing meetings, constant communication via our project managers, and conference calls to rapidly resolve issues. We ensured that hospital leadership was informed of the status every step of the way and explained the transition in numerous newsletters, blogs, posters, emails, and presentations to the entire community.
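The directory level redirects described in lesson 8 can be sketched as a simple prefix lookup. This is only an illustration in Python, not the actual ISAPI filter; the `REDIRECTS` table, its paths, and the `redirect_target` function are hypothetical examples rather than the real BIDMC mappings.

```python
from typing import Optional

# Hypothetical old-directory -> new-directory mappings (illustrative only).
REDIRECTS = {
    "/departments/cardiology": "/centers-and-departments/cardiology",
    "/patients": "/patient-and-visitor-information",
}


def redirect_target(old_path: str) -> Optional[str]:
    """Return the new path for an old path, or None if no rule matches.

    The longest matching directory prefix wins, and the remainder of the
    path is carried over unchanged.
    """
    for prefix in sorted(REDIRECTS, key=len, reverse=True):
        if old_path == prefix or old_path.startswith(prefix + "/"):
            return REDIRECTS[prefix] + old_path[len(prefix):]
    return None
```

Matching on whole directory prefixes keeps the table small: one rule covers every bookmarked page beneath that directory, which is presumably why a few hundred rules can cover an entire site.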

The external website project at BIDMC has been a great collaboration that required unique governance, trust among multiple project participants, and boundless energy by all.


Tuesday, September 16, 2008

The Era of Disposable Printers

A year ago, I purchased a Hewlett Packard OfficeJet Pro K550 for my home. It's a networked printer that enables any computer in my household to easily print via IP.

We actually do not print very often - we're a near paperless household, so the printer only gets about 1000 pages a year of activity.

It turns out that the HP K550 has a few design deficiencies that have been described on the web. If the printer is not used frequently, the print heads clog and need to be replaced.

Last week, I tried to print a document and the printer failed with an unrecoverable error condition. Per the manual, the HP website, and various online forums, the recommendation was to power down the printer, disconnect all network cables, and then reboot everything after a few minutes.

That did not solve the problem, so the next step was to purchase new printer cartridges and print heads.

Numerous people have had this problem, as illustrated by this customer complaint website.

I stopped by Staples and found that printheads for the OfficeJet Pro series are $70 each and you need 2 - $140 in print heads.

A suite of ink cartridges includes $38 for black and $27 each for Cyan, Magenta and Yellow - that's about $120.

Thus, a replacement for the clogged printhead and ink is $260.00.

The HP K550 was replaced by the HP K5400 to correct the design defects. A brand new HP K5400 with a full set of new print heads and new cartridges is $149.00 (see photo above). The print capability of the new cartridges is over 1000 pages.

Hmmm - should I pay $260.00 to repair my old printer or pay $149.00 to get a new one? In fact, every year when I need to replace my ink cartridges, why bother - just buy a new printer. With the Staples printer rebate (the HP K5400 is $125.00 today), it's actually cheaper to buy a new printer rather than to buy cartridges.
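The comparison can be checked with a few lines of Python. The prices are the ones quoted in this post; the constant and function names are illustrative only. Note that the ink set sums to $119, which the post rounds to $120, so the exact repair total is $259 rather than $260.

```python
# Prices quoted in this post (US dollars); names are illustrative only.
PRINTHEAD_PRICE = 70
PRINTHEADS_NEEDED = 2
BLACK_INK = 38
COLOR_INK = 27      # each, for cyan, magenta, and yellow
NEW_PRINTER = 149   # HP K5400 with print heads and cartridges included


def repair_cost() -> int:
    """Cost of new print heads plus a full set of ink cartridges."""
    heads = PRINTHEAD_PRICE * PRINTHEADS_NEEDED
    ink = BLACK_INK + 3 * COLOR_INK
    return heads + ink


if __name__ == "__main__":
    print(f"Repair: ${repair_cost()}  New printer: ${NEW_PRINTER}")
```

Either way, repairing costs well over $100 more than replacing the printer outright.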

I understand the economics of this. It's a bit like the Gillette razor - give the razor away and commit every shaver to a lifetime of Gillette blade purchases.

However, the environmental impact of tossing a few razor handles is far less than disposing of a printer yearly.

Being an environmentally responsible person, I purchased the $260 of print heads and ink to repair my old printer. I installed them, followed every online recommendation, and found that the HP K550 could not be salvaged.

The great news is that the K5400 uses the same cartridges, so I purchased a new printer. I now have a fully functional K5400 and two years of ink cartridges.

My old K550 went into equipment recycling at the Wellesley RDF.

It's truly twisted economics when it's cheaper to dispose of your printer yearly than to buy supplies. I think back on my beloved HP LaserJet II that printed perfectly, lasted a decade, and had affordable supplies.

Maybe as the cost of energy and raw materials increases, HP will try a greener approach and make high quality, long lasting printers, with environmentally sound ink cartridges that encourage recycling and reuse rather than yearly printer disposal.

Monday, September 15, 2008

The Impact of Lean Times on IT Organizations

Times are tough in the US right now. Energy prices are high, investment banks are crumbling, real estate prices are tumbling.

Recessions hurt. Unemployment increases, anxiety escalates, and people suffer.

What about the impact on IT organizations?

To me, lean times require every company to re-examine itself and validate its priorities. Capital and operating budget processes separate the 'nice to have' from the 'must have'. Discretionary projects are deferred.

Since I have a strong belief in IT governance, which suggests that IT strategy should be perfectly aligned with the overall strategy of the company, I welcome this kind of corporate introspection, which results in a very specific list of high priority projects.

I would much rather have a short list of high priority, high business value projects that focus on the basics than a list of ambiguous, speculative projects driven by politics.

Lean times enable the IT organization to catch up, ensure its own processes are optimized, and realign its work with business owners.

There is the risk that resource constraints will tip the IT organization from 'lean and mean' to 'bony and angry' but ideally, appropriate governance process will align expectations and resource availability so that lean budgets yield realistic expectations of what can be done with existing resources.

Despite the doom and gloom every night on CNN, I would offer the optimistic suggestion that lean times are a positive experience for IT organizations, creating accountability for doing a few new projects really well.

Given the economies of scale that often happen with centralized IT infrastructure and well coordinated enterprise application projects, lean times can also reduce the number of competitive departmental/local efforts. As I've said previously, there will always be a balance between central and local IT, which is good, but taking the time to carefully plan enterprise resource allocation instead of simply reacting to evolving local efforts is optimal.

Yes, people are hurt by an economic downturn and I will not minimize the negative aspects of recessions. However, I feel that 2009 will be a great year for all my organizations to ensure that our IT priorities are the specific projects needed to ensure the stability of the business in lean times.


Friday, September 12, 2008

Cool Technology of the Week

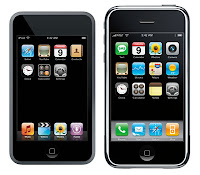

In the past, I've been called an Apple fanboy, despite the fact that I declared in a recent blog that the iPhone 3G is not a perfect device.

At Harvard Medical School, we've been evaluating mobile devices for education and clinical care. From our early surveys, it's clear that Palm OS is dying, Windows Mobile Smartphones are fading, and that iPhone and Blackberry are the smartphones of choice.

The iPhone has two downsides - you have to use AT&T, meaning that you may have to switch networks just to get an iPhone. Also, the keyboard does not lend itself to high volume email input.

We have publicly available WiFi throughout the medical school and throughout the HMS affiliate hospitals. This gives us an interesting possibility for the mobile clinical data viewing and educational device of the future - the iPod Touch, which is my Cool Technology of the Week.

You may think I'm going out on a limb, declaring the iPod Touch a game changing device, but I have my reasons.

1. The iPod Touch is basically an iPhone 3G without the need for an expensive AT&T 2G/3G phone/data plan. The applications are the same (everything at the App Store including ePocrates), the email functionality is the same, and the user interface is the same. This means that anyone can use all the application functionality of an iPhone 3G without any connectivity cost by just buying an iPod Touch and using WiFi.

2. The web browsing, media management, and content viewing of the iPod Touch are remarkable. My complaint with the iPhone - the challenging keyboard for entry - becomes less of an issue if you're using the device for viewing data, webpages, and media.

3. Students have declared that they are no longer buying Palm OS or Windows devices. The iPhone and the iPod are their multimedia devices of choice.

4. We've prototyped using several of our web-based applications on the iPod Touch. They work perfectly as long as they do not require significant typing/data input.

5. The device fits in a white coat pocket, weighs under a pound, and has a battery that lasts a shift.

Thus, I believe the iPod Touch is a device to watch for clinical and educational applications. I suspect it will be used in many novel ways in healthcare and not just as a glorified music player.

Thursday, September 11, 2008

Preserving Summer's Bounty

As a vegan committed to green living, I'm a big fan of community supported agriculture and eating foods grown regionally. (Ok, I have to make an exception for Green Tea). Eating regionally year round is no problem if you live and work in San Francisco but it's really challenging if you live in New England where the ground is frozen from November to May.

How does a committed locavore (that's the new lingo per the Oxford English Dictionary for a regional food patriot) thrive in New England?

The answer is to preserve the summer's bounty. Over the past few weeks, my wife and I have been harvesting the beets, turnips, eggplant, cucumbers, corn, tomatoes, kale, beans, squash, peppers, and carrots from our home garden (photo above).

The techniques we're using are more 1700s than 21st century. We use no herbicides, pesticides, or chemical fertilizers in the garden. We preserve through low sodium pickling, canning, and preparing root vegetables for a winter in the root cellar so that we'll have regional foods for months to come.

Here are a few of our favorite ways to preserve regional foods:

Basic Refrigerator pickles

Sweet pickles

Dill pickles

Low sodium pickles

Asian pickles

Canning

Freezing

In my Dreaming of Green blog entry, I described my lifelong journey to incrementally reduce my carbon footprint and create a simpler, more sustainable lifestyle. This year, our efforts with community supported agriculture and our own garden are helping us iteratively improve our regional food commitments.

I look forward to the day when the refrigerator and pantry are stocked with only regional foods, eaten fresh from June to October and eaten preserved from November to May. We've already eliminated all animal products from the household. The next step is to reduce our reliance on pre-packaged and commercial sources of food. No more midwinter fresh fruits from Chile or lettuce flown 4000 miles.

It's a journey that will take many years, but our efforts to reduce/recycle/reuse, eat regionally, and reduce dependency on commercial sources may result in enough discipline so that our retirement lifestyle can be carbon neutral, off grid and as supportive of regional producers as possible.

Wednesday, September 10, 2008

Electronic Prescriptions for Controlled Substances

I've recently been asked about the timeline for the DEA to support the electronic prescribing of controlled substances. The prohibition against electronically prescribing controlled substances is a significant barrier to the adoption of e-Prescribing since it requires a separate workflow to write for Lipitor versus Librium.

The DEA has published a notice of proposed rulemaking (NPRM) and offered a comment period until September 25. The DEA has not specified the timeframe for implementation or next steps after the comment period.

In general, the NPRM describes the requirements for the use of electronic systems to create, sign, dispense and archive controlled substance prescriptions.

From reading the NPRM, it is clear that the DEA has framed the issue around law enforcement, which is appropriate given the mission of the DEA:

"These regulations provide pharmacies, hospitals, and practitioners with the ability to use modern technology for controlled substance prescriptions while maintaining the closed system of controls on controlled substances dispensing; additionally, the proposed regulations would reduce paperwork for DEA registrants who prescribe or dispense controlled substances and have the potential to reduce prescription forgery."

The NPRM contains a description of the business processes required to ensure

1. authentication of the prescriber

2. non-repudiation of the prescription

3. integrity of the record keeping process

Each practitioner must have their identity verified through an in-person identity proofing process before they can use an electronic system to prescribe controlled substances. Entities that may conduct in-person identity proofing of a prescriber include:

1. The credentialing office of a DEA-registered hospital;

2. The State professional licensing Board or State controlled substance authority that authorized the practitioner to prescribe controlled substances; or

3. A State or local law enforcement office.

In order for a prescriber to access the system and write electronic prescriptions, the practitioner must authenticate using a two-factor authentication process, which means using something that you have (a smart card, token or thumb drive containing a digital certificate) plus something that you know (a strong password). This process will have to be used each time the practitioner wants to sign a controlled substance prescription.

Other requirements include:

1. A two minute timeout on the e-prescribing application, requiring two factor re-authentication to return to the e-prescribing screens after timeout

2. For each prescription, the provider must "check a box" confirming the patient's name, the drug being prescribed, the dosage, the applicable DEA number, and a statement indicating that the practitioner understands that he has reviewed the prescription information and intends to sign and authorize the prescription being transmitted.

3. The prescription must be transmitted immediately and cannot be printed in the future if it was transmitted electronically

4. The eRx system must generate a log of all controlled substance prescriptions which the provider must review monthly. Logs must be kept for 5 years

5. Electronic prescriptions of controlled substances cannot be converted to non-electronic form, such as faxes, at any time.
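To make the authentication, timeout, and attestation requirements above concrete, here is a minimal sketch of how an e-prescribing application might gate the signing step. It is an illustration only, not anything specified in the NPRM: the names `Session` and `may_sign` are hypothetical, the factors are reduced to simple flags, and I am assuming the two minute timeout is measured from the last user activity.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Values taken from the requirements summarized above.
SESSION_TIMEOUT = timedelta(minutes=2)   # two minute application timeout
LOG_RETENTION_YEARS = 5                  # logs must be kept for 5 years


@dataclass
class Session:
    """Hypothetical session record; real systems would verify each factor."""
    has_token: bool         # something you have (smart card, token, thumb drive)
    knows_password: bool    # something you know (strong password)
    last_activity: datetime

    def is_two_factor(self) -> bool:
        return self.has_token and self.knows_password

    def is_expired(self, now: datetime) -> bool:
        # Assumption: the timeout is measured from the last user activity.
        return now - self.last_activity > SESSION_TIMEOUT


def may_sign(session: Session, attested: bool, now: datetime) -> bool:
    """Allow signing only with both factors, a live session, and the checked box."""
    return session.is_two_factor() and not session.is_expired(now) and attested
```

A prescriber who steps away for three minutes, or who has not checked the confirmation box, would be sent back through two factor authentication or the attestation step before the prescription could be signed.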

Given the impact of the NPRM on providers, pharmacies, intermediaries (such as Surescripts/RxHub) and vendors, I am sure there will be many comments made before September 25. Check out the testimony of Paul L. Uhrig, EVP Corporate Development, General Counsel, & Chief Privacy Officer of Surescripts/RxHub. After the comment period closes, I would guess that we'll have a year before a final rule is published. On the one hand, I want to accelerate e-prescribing by creating a seamless electronic workflow for all medications. On the other, I am not looking forward to supporting tokens, smartcards, and other forms of two factor authentication.

Tuesday, September 9, 2008

Delayed and Embargoed Results on PatientSite

Today I was asked about the way we display results in our personal health records and the way we transmit results to personal health record services like Google Health and Microsoft HealthVault.

In general we share everything with the patient immediately, since it is the patient's data.

However, from our 7 years of supporting personal health records through PatientSite, we've learned that some news is best communicated in person between doctor and patient. Do you want to find out you have cancer on the web or via a thoughtful discussion with your doctor?

Here are the reports we delay so that a discussion between doctor and patient can occur first. In the case of HIV testing, since special consents are required, we do not show the results in PatientSite at all.

CT Scans (used to stage cancer) 4 days

PET Scans (used to stage cancer) 4 days

Cytology results (used to diagnose cancer) 2 weeks

Pathology reports (used to diagnose cancer) 2 weeks

HIV DIAGNOSTIC TESTS: Never shown

• Bone Marrow Transplant screen, including

HIV-1, HIV-2 Antibody

HTLV-I, HTLV-II Antibody

NHIV (Nucleic Acid Amplification to HIV-I)

• HIV-1 DNA PCR, Qualitative

• HIV-2, Western Blot. Includes these results:

HIV-2 AB, EIA

HIV-2, Western Blot

• HIV-1 Antibody Confirmation. Includes these results:

Western Blot

Anti-P24

Anti-GP41

Anti-GP120/160

I hope these rules help others who are implementing personal health records. We want the patient to own and be the steward of their own data, but we also want to support the patient/doctor relationship and the notion that bad news is best communicated in person.
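For anyone implementing similar rules, the delays listed above can be expressed as a small lookup table. This is a minimal sketch, not the PatientSite implementation: the report type keys and the function names `release_date` and `is_visible` are hypothetical, and I am assuming that "2 weeks" means 14 calendar days and that the delay is counted from the date the result is finalized.

```python
from datetime import date, timedelta
from typing import Optional

# Delay in days before a result is shown to the patient; None means never shown.
# Keys are hypothetical labels for the report categories listed above.
RELEASE_DELAY_DAYS = {
    "ct_scan": 4,
    "pet_scan": 4,
    "cytology": 14,         # assumption: 2 weeks = 14 calendar days
    "pathology": 14,
    "hiv_diagnostic": None,
}


def release_date(report_type: str, resulted_on: date) -> Optional[date]:
    """Return the first day a result may be shown, or None if never shown."""
    # Anything not listed is shared immediately (a delay of zero days).
    delay = RELEASE_DELAY_DAYS.get(report_type, 0)
    if delay is None:
        return None
    return resulted_on + timedelta(days=delay)


def is_visible(report_type: str, resulted_on: date, today: date) -> bool:
    """True once the embargo period for this report type has passed."""
    released = release_date(report_type, resulted_on)
    return released is not None and today >= released
```

Keeping the rules in one table makes it easy to review them with clinicians and to apply the same embargo when transmitting results to outside personal health record services.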
